A little while ago, I posted on the subject of risk assessment in education, and how educators, like politicians, reporters, and all other humans, are just terrible at it. Among the issues I mentioned in the post was the subject of school shootings. Here is the relevant paragraph:

To my knowledge, there have only ever been ten acts of gun violence in Canadian schools since 1902. The total death toll was 26, more than half of which came from a single incident at the École Polytechnique in Montréal. One came from a school in Alberta where a friend of mine was teaching, eight days after the Columbine case in the U.S. If you estimate the total number of students in Canadian schools since 1902 (hard to tell: there are 5.2 million kids in school today, NOT counting universities and colleges; multiply that by 110 years and skim a bunch off for the smaller population in previous generations….you still get several hundreds of millions), and figure those 26 unfortunate people into that number, the chances of dying in a school shooting in Canada are too small for my calculator to measure without an error message. But every year, we now have to suffer through “Lockdown Drills”, officiated by the police, where we all have to pretend there’s a maniac in the halls. Time is wasted, kids are frightened, and money is spent for no good cause. Remember, all violent crime is on the DEcrease, very dramatically. Polls show that children’s safety at school is the single most common crime-related concern, and yet the school environment is statistically, indisputably, the safest place for kids – much safer than the home or the street.

I argued this at the school where I work, and got a lot of flak from colleagues who either a) took issue with my questioning lockdown drills, which they regarded uncritically as a clear benefit to our society, or b) took issue with my “wasting time” by thinking critically about policies that affect us all. Out of this somewhat one-sided discussion came a number of interesting points that I thought warranted a separate post. I also emailed Lenore Skenazy, the author of Free Range Kids, and she put the question to the readers of her blog. They repeated a number of the questions and assumptions made by my colleagues, which to my mind miss the point, since they are framed by the assumption that lockdown drills are the only real response to the threat of gun violence at schools. I take issue with those assumptions, and I’ll try to explain why here.

1. Likelihood of Danger

First of all, I’m not convinced that the threat of a school shooting is severe enough to warrant this kind of attention. I totally understand the perceived reason for lockdowns. I do. I really get the fear that comes with kids, and guns, and the potential for disaster and loss. God forbid that anything should happen, as we all say.

That said, here are some numbers that as far as I can tell are correct:

1. There have been ten incidents of gun violence in Canadian schools over the last 100 years.

2. The total casualty number is 26. More than half of those were at Polytechnique, in 1989. This is a date that comes before the dramatic statistical drop in incidents of violence after the 1990s.

3. The stranger-as-gunman situation is not the norm.

4. There are about 5.6 million Canadian students below the postsecondary level right now.

5. That gives us a pool of tens (hundreds?) of millions of students, a century of recorded time, and 26 casualties, which statistically gives us such a tiny risk that it is treated as zero. “De minimis” is the term.

6. Compare this (for example) to the possibility of dying in a car crash: 1 in 6000, approximately. Driving to school is thousands or even millions of times more dangerous – and a real risk – than the vanishingly small chance of a shooting in school.
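For anyone who wants to see the arithmetic behind points 5 and 6, here is a rough back-of-the-envelope sketch. The size of the student pool is my own assumption (a deliberately conservative 300 million student-years, since the real figure is hard to pin down), and the “1 in 6000” car-crash figure is taken as quoted, so treat the output as an order-of-magnitude illustration rather than a precise result.

```python
# Back-of-the-envelope comparison of the two risks listed above.
SHOOTING_DEATHS = 26            # total casualties, from point 2
STUDENT_YEARS = 300_000_000     # ASSUMED: a conservative guess at "hundreds of millions"
CAR_CRASH_ODDS = 6000           # "1 in 6000", as quoted in point 6


def one_in(deaths: int, exposure: int) -> float:
    """Return N such that the risk is roughly '1 in N'."""
    return exposure / deaths


shooting_one_in = one_in(SHOOTING_DEATHS, STUDENT_YEARS)
# How many times larger the quoted car-crash risk is than the shooting risk.
ratio = shooting_one_in / CAR_CRASH_ODDS

print(f"School shooting: about 1 in {shooting_one_in:,.0f} per student-year")
print(f"Quoted car-crash risk is about {ratio:,.0f} times larger")
```

Under these assumptions the car-crash risk comes out a couple of thousand times larger, which is in line with the “thousands of times” estimate in point 6. A bigger student pool would only widen the gap.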

I can say with confidence – way more confidence than I could bring even to a discussion of shark attacks – that a school shooting will not happen here. In fact, there is nothing in my life that I can say for certain will never happen, but this is about as close as it comes. I cannot have that same confidence that students will survive their car ride home. We say that we are preparing for something that might happen: but the list of things that might happen is long, and we can’t work that way. We have to work with what realistically has a probability of happening. We are actually preparing for something that statistically will not happen. The drills, on the other hand, are real: we are actually doing them, and things we actually do have real impacts, far more so than things that will not happen.

Even if we do accept that school shootings are rare, people sometimes argue that lockdowns are useful in response to incidents of violence in schools other than school shootings, such as knives being brandished, etc. One of my colleagues mentioned his experience of such incidents in support of this theory. But again, these incidents are extremely rare and getting rarer. ALL violent incidents are dramatically down, inside schools and outside. I think the availability heuristic might be at work here. Just because we can easily call an incident to mind does not mean that it is actually more likely to happen.

People also mention the potential usefulness of a lockdown in the case of a bomb threat. Has there ever been an incident involving an actual bomb at a school? I can’t remember hearing of one. There are plenty of threats; in fact, the school I teach at suffered a rash of them recently once some students found out that our reaction to such a threat (school-wide lockdown or evacuation) was so extreme and disruptive. If you want to disturb shit, get attention and disrupt classes, what could be better? It’s the go-to strategy of the sociopaths among our student population. We’ve had way more fake bomb threats based on the understanding that we will react dramatically than we have had real threats.

Strangers in the building? Recently a stranger came into our school. He entered, went to the bathroom, and left. We went into lockdown, and though our principal calmly soothed fears by telling students over the P.A. when it was over that he had done no harm, I asked myself why on earth it would be assumed that a member of the society we all live in would automatically have dastardly intentions. He probably had to pee. Why would we assume the worst, all the time? This says way more about us, in my opinion, than about “strangers”.

As Mark Twain said, “The trouble with the world is not that people know too little, but that they know so many things that ain’t so.”

2. Cost / Benefit Analyses

People have said that the costs of NOT having drills might be very high, whereas the cost of doing them is nil, aside from some time lost. The problem with the typical cost-benefit analysis of a lockdown drill (aside from not factoring in the real monetary costs of having the police at the school for several hours while more than a hundred staff members are sitting in the dark, not teaching) is again that we don’t know if it’s true. Do these drills in fact help to reduce cost in terms of human life? What is their cost in terms of quality of life? Where are the studies on this? I understand that we’re mandated to do these, but I would really like us to question the resources allocated to such things.

In addition, could we think about the perceived potential benefits of performing lockdowns, compared to the real effects of actually doing them? We might think about possible social repercussions of normalising paranoia (that’s what it is; it’s not a realistic risk), and the anxiety that we produce. I also wonder how many other, more pertinent risks we are ignoring. Why is First Aid not a priority, for example? In explanations I’ve read for the mandated 2-a-year lockdowns, fear of litigation is the most prominent reason given. What if something happened, and we hadn’t been seen to “do something”? My fear is that our response is like the one from “Yes Minister”, in syllogistic format:

a) Something must be done.

b) This is something.

c) Therefore, this must be done.

Could time and money be spent on stopping the bullying that some people claim produces school shootings? Or on building community? Are we reacting emotionally (or politically) to irrational fears — in other words to symptoms? How can we stop doing this and get to the root of the issues which (rarely) produce problems? I don’t have the answers, but it seems to me like we might not be asking very good questions.

The social costs, on the other hand, seem to be real. “Lockdown” is a term that had its origins in jails. Now it’s common parlance in schools, where we are MORE safe than ever. Just look at these figures:

a) In the U.S., which has ten times the number of students we do (and better statistics; hence my use of data from south of the border), the rate of “serious violent crime” in 2004 was 4 per 1000 students. That’s down to less than 1/3 of what it was in 1994.

b) In the U.S., in 1997-1998, at the height of the statistically anomalous spike in violence during the 1990s, the average student had a 0.00006% chance of being murdered at school. That’s 1 in 1,529,412. And the risk has shrunk a lot since then.

c) Studies that I have read indicate that the kind of lockdown drills that we do, where kids are sitting or lying on the floor, are the least effective and most anxiety-raising. Aside from the godawful lockdowns that happen in the States where people actually roleplay shooters and bloodied victims, they are the worst.

d) Remember that the risk of your child being a victim of a school shooting is effectively zero. But in 1997, a poll showed that 71% of Americans said it was “likely or very likely” that a school shooting would happen in their community. One month after Columbine, 52% of parents feared for their kids’ safety at school; 5 months later this was unchanged. Why are we allowing public policy to be decided when the data and people’s fears are so unbalanced?

e) Significantly, media “feedback loops” continue. Here at home, do you remember the “anniversary” episodes of both Polytechnique and (for a whole WEEK!) 9/11 on the local news? Even the normally moderately sane CBC was guilty of this.

f) Politicians also ought to make it clear that schools are safe. Instead, they don’t take the political risk of appearing not to take the “problem” seriously. Once again, schools are safe.

g) In the U.S., vast amounts of money are spent on metal detectors, police presence, and other invasive security measures that drain monies away from books and events at school. These measures also create an oppressive atmosphere, which is unnecessary since less than 6% of students are reported to carry weapons of any kind (even pen knives) to school. Fewer still would use them in a violent manner.

h) The adoption of “zero tolerance” policies towards violence actually was found to INCREASE bad behaviour and dropout rates. The APA called for them to be dropped.

i) Studies also show that schools operate best when they are connected to the community in strong ways. Treating all strangers as homicidal maniacs does not seem to strengthen community.

j) 1 in 5 parents report “frequently” worrying that their child will come to harm at school. Another 1 in 5 worry ‘occasionally’.

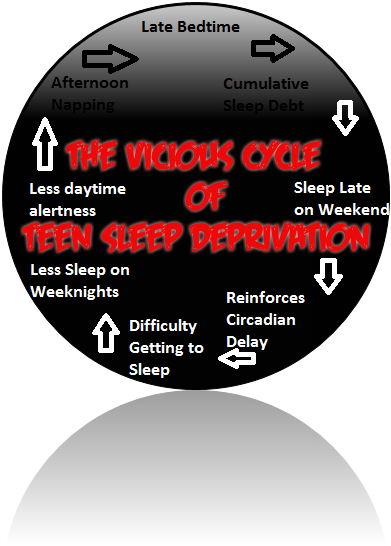

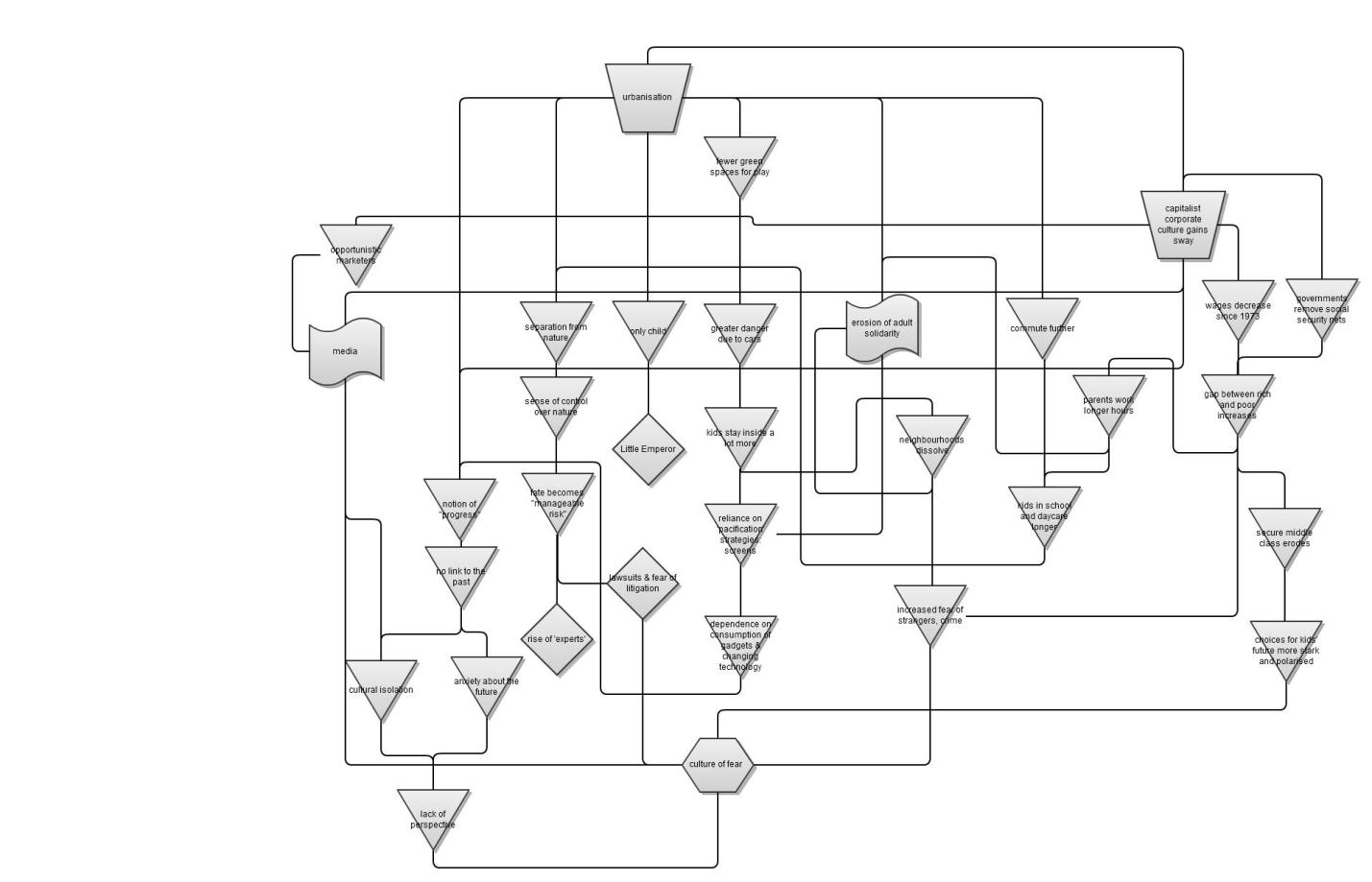

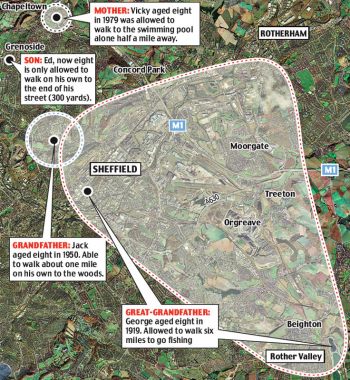

k) In the U.K., parents are so anxious when their kids leave the house that in 2004, a poll found that 2/3 of parents experience anxiety WHENEVER their kids are outside the home. 1/3 of kids NEVER GO OUT ALONE. The result is that almost half of British kids stare at screens for more than 3 hours a day. Child welfare likened it to being raised like “battery chickens”. As far as I can tell, there has been only one school shooting in the U.K., in Scotland in 1996. It took a total of three minutes from start to finish, and I am unsure of the efficacy of a lockdown situation there.

l) In 2007, a group of 270 child psychologists from across the Commonwealth and U.S. wrote an open letter in a British newspaper, declaring that parental anxiety over “stranger danger” may be behind “an explosion in children’s diagnosable mental health problems”. They advocated a return to unstructured unsupervised play as part of a remedy.

m) The usual “better safe than sorry” also doesn’t take into account the prolonged angst that comes with feelings of living in a dangerous environment. This we do know causes feelings of helplessness, listlessness, and depression, all of which negatively affect learning. This should come into the equation somewhere if we’re serious about education.
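A quick aside on the numbers in item b) above: tiny percentages and “1 in N” odds are easy to mix up, so here is a minimal sketch of the conversion between the two. The function names are mine, purely for illustration. It also shows that the rounded 0.00006% is slightly smaller than the more precise 1-in-1,529,412 figure, which is why the two do not convert back to each other exactly.

```python
def one_in_n_to_percent(n: float) -> float:
    """Convert odds of '1 in n' to a percentage chance."""
    return 100.0 / n


def percent_to_one_in_n(percent: float) -> float:
    """Convert a percentage chance to the n in '1 in n'."""
    return 100.0 / percent


# The more precise figure quoted in item b).
pct = one_in_n_to_percent(1_529_412)
print(f"1 in 1,529,412 is about {pct:.7f}%")

# The rounded percentage quoted in item b), converted back to odds.
rounded_n = percent_to_one_in_n(0.00006)
print(f"0.00006% is about 1 in {rounded_n:,.0f}")
```

Either way you slice it, the figure is well under one in a million.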

3. Emergency Preparedness

People say that it’s best to be prepared. Okay. That’s a good enough sound bite that the Boy Scouts use it as a motto. But prepared for what? How? Analysis of the few historical incidents relevant to the discussion seems to indicate that lockdown procedures might not have helped during Columbine or other similar situations. As Dan Gardner points out, we are really, really bad as a species at predicting major events. The Black Swan theory of historical events holds that nearly every significant historical game-changer was unpredicted, and probably unpredictable. That’s part of why they’re so potent. Not knowing where bizarre, unpredictable events will come from is just part of life. We really ought to prepare for things that are statistically likely to happen. Jumping to the worst-case scenario is just feeding the beast. You should see how much money gets allocated to things like “security experts” these days. Their job is to think of the worst, most horrible things that could possibly happen, given egregious circumstances. That’s not really being balanced. And they make money by doing so. These are the people who helped to kill community by teaching kids to scream “stranger danger!” and run away from people in their neighbourhoods, when all good data tells us that they are much more likely to be abused at home. Nobody really predicted the various outré events like 9/11 or Columbine; nothing that happened during those bizarre events matched anything that people had imagined. The amount of money that the TSA makes by groping airline passengers is grotesque, and I’m not convinced it increases security. Cui bono? as they say.

We also don’t know if THIS PARTICULAR reaction to a threat (whether that threat plausibly exists or not) is the most appropriate. This is not the only conceivable reaction to a perceived threat. We don’t know if a lockdown drill does increase safety or minimise anxiety, as is the claim; in fact, the only studies that I’ve read concerning lockdowns have been on the other side of the question, with psychologists questioning whether they increase anxiety, particularly in students who come from high-risk, high-stress backgrounds like war zones, and so forth. Of course we’d all rather be safe than sorry. But does this particular thing make us safe? And are we unsafe from the get-go? From what? Even if we are unsafe, which I don’t yet see evidence for, I want to know as a teacher and a member of society that we’re not just sticking bananas in our ears to keep the tigers away. Remember the duck-and-cover drills kids had to do during the Cold War? We laugh at those now, and I am sure that we will laugh at our own foolishness in the future.

Not only that, but the idea that this kind of lockdown drill is somehow proactive is silly, too, in my opinion. Our model remains a post-facto reaction to something that is clearly already out of control. And it involves the violent intervention of paramilitary actors in the role of police SWAT teams. If there’s one thing we’ve learned about the perception of violence, it’s that it tends to ramp itself up. If you want to be truly proactive, seek out the root of violence and address those issues before they add to the statistics. Not that the statistics are really even worth worrying about. The message ought to be that school is the safest place you can be, where you can send your kids to be statistically multiple times more safe than at home or on the street. We’re acting like we know this lockdown stuff works, and that it’s the only option. In fact, there are no good studies yet to tell us if this is true. To insist that, even if action of some sort does turn out to be required, it must be this action and no other, is illogical.

4. Protection of Children

Statistically speaking, the most likely way for a kid to die in North America is because of a car accident. Every single time a kid gets in a car, she has about a 1 in 6000 chance of dying. That’s real. In my opinion, if we want to increase safety, we would focus on the daily carnage on North American roads. Why are we so blasé about driving? It is actually quite likely to kill our students. I have several times had to bear this bad news to classrooms full of students whose friends have just died in car crashes. This is hypocrisy of the highest order. Do we care about kids’ safety, or do we just care enough to want to look like we’re doing something without dramatically inconveniencing ourselves in the process? We can legislate lockdown drills for things that statistically will not happen, and “let the schools deal with the problem” while we go about our business of driving around in our dangerous cars, killing kids. In fact, our stupendous stupidity at risk assessment has created a situation where more kids are hit by cars driven by parents who are driving their kids to school for fear of safety issues than by anybody else. The irony drips.

So let’s just be clear here again: I’m not anti-safety, and certainly not anti-children (thanks, black-and-white thinkers!). I would like to increase safety by figuring out what that means and how to do it. And I would like to do that without contributing to any corrosion of the society I live in. If we want to really make a huge difference in student safety, we might think about public transportation, for example, which would get them out of cars going to and from school. That would save lives for sure. And how about resources to create anti-bullying campaigns that promote acceptance and even affection between all members of the student body? That would have saved a life in this city recently, where a young man committed suicide after being bullied for being gay. Sadly, more kids kill themselves than each other. In fact, it’s the second most likely way a young person will die, after car crashes. It seems like there’s a danger of complacency when we think of safety in terms of lockdowns, and do not focus on deeper matters.

Now, the good news is that the OLD reason for kids dying, i.e. disease, is mostly way down, including things like cancer. Cancer rates are down, cancer in kids is down, and mortality for kids with cancer is down. This should be a good news story. According to Steven Pinker’s newest book, we are living in the least violent, most peaceful, safest, healthiest, longest-lived, most leisured society on Earth since the beginning of humanity. There are fewer wars, they last less long, and take fewer lives, than ever. We should be the least anxiety-ridden people ever to walk the planet. So check it out: we ARE safe. We’re the safest human beings have ever been. Anybody who tells you different may be selling something. Despite our relative Über-safety, though, anxiety levels – particularly amongst teens – are WAY up. We have to try to accept some of the societal blame for this, and for the consequences of teen anxiety and depression, including suicide, which as I said is the second most likely cause of death among young people. We’re safe, but we make up fears for ourselves to fill the gap, and pass those fears along to our kids. But we don’t even do that well! Here is a short list of the kinds of things that we could realistically be afraid of, and spend time and resources on, based on statistical danger (again, most of this data is U.S. specific):

The ‘flu still kills 36 000 people annually (the normal kind, not the swine or bird ‘flus, which frightened far more people than they actually killed). Globally, the seasonal ‘flu kills about half a million people every year: the swine ‘flu killed under 20 000, putting its global death toll at less than the normal yearly rate of U.S. seasonal ‘flu death.

68 people are wounded by pens and pencils every year.

According to the U.S. Consumer Product Safety Commission, there were 37 known vending machine fatalities between 1978 and 1995, for an average of 2.18 deaths per year.

3 000 people are injured by chairs at work or school.

2 944 people are injured by desks.

Photocopy machines injure 497.

1 241 people are injured by computers.

Clocks injured 74 people in 2001.

212 people were sent to hospital after encounters with telephones.

73 people are killed every year by lightning.

120 people are injured by toilets DAILY!! (Read Dave Barry’s column or blog for statistics on exploding toilets.)

You have a 1 in 150 000 chance of choking to death every time you eat (not insignificant! But the biological benefits of having your larynx in this awkward position, and therefore giving you the power of speech, outweigh the risk, even at those odds. Nature, at least, seems to understand risk assessment!)

Even if you just sit quietly and do nothing, your chances of dying randomly at any moment are about 1 in 450 000, given the entire population of the U.S., which of course includes the elderly and ill.

Those are some things that we might worry about if we were more rational about risk. Instead, we worry about terrorism, child abduction, and school shootings, which are about as unlikely to kill our kids as sharks. Okay, I know that when kids come into the picture, realistic assessment of risk goes WAY down. That’s not anybody’s fault; it seems to be a common cognitive bias. But come on! Are we adults? Can we not get over this?

5. Lockdowns and Fire Drills

People often compare lockdown drills to fire drills, but I’m not 100% sure of how useful it is to make this comparison. They seem to be many orders of magnitude apart. But it’s a fair question: have many schools burnt down in the last century? I’ve heard of it happening with tragic results; I think a lot of the new fire codes, including mandatory drills, came from such incidents. Fires in general are pretty common, so I don’t know if it’s in the same league. Let’s check the stats:

Good news – deaths by fire have been on the decline for the past several decades, though they’re still the third most common way people die at home. On average in the United States in 2010, someone died in a fire every 169 minutes, and someone was injured every 30 minutes. Only 15% of these fires occurred in non-residential buildings. So no, they’re not on the same scale at all. And I’m afraid that the lockdown drill, although it is modelled on the fire drill, does not work from the same basic assumptions. In a fire drill, you know what the situation is, and there are time-tested methods of dealing with it in an efficient manner by using behaviourist training. Leaving a burning building doesn’t require training, but doing so quickly but in an orderly manner, and overcoming the common instinctive reaction to grab meaningful possessions, means that you have to program anti-intuitive behaviours into people. That’s what drills are for. When I was in the Army, we did drills to try to ingrain habits in us that countered powerful intuitions that, unfortunately, were dangerous. When we smelled gas, we had to be trained to put our own gas masks on before we warned our platoon mates of the danger: something like putting your own oxygen mask on in a plane emergency before helping a child. It’s not intuitive, but it saves lives. In a lockdown, we do not always know what kind of situation this response might address. In such a situation, where there are no parameters, how are we to know that sitting quietly and waiting for police is actually the best strategy?

As to the argument that fire drills and lockdowns reduce panic, there’s no evidence that people run scared when faced with unexpected events. Hollywood has people screaming and running from everything from terrorists to Godzilla, but in real life this does not seem to happen. People sometimes do kind of stupid things in emergencies, but there is not much evidence for panic of the kind many people describe. In the one case of a suspected gun at school that I have experienced, there was no panic, and I stupidly entered the building, thinking I could help somehow (it turned out to be a kid with a toy gun). I was teaching in London during the Underground bombings in 2005, and for nine horrible hours I thought we had lost students. I was dreading calling parents; it was really awful. It turned out that things were so outwardly normal in the city that the kids (who had the day off and were shopping) had no idea anything was wrong, and therefore didn’t check in.

Instead of fire drills, a better comparison might be to the “duck and cover” drills of the Cold War, when the baby boomers who are now forming public policy had to hide under their desks for fear of The Big One, courtesy of the Communists, who may or may not have been a bigger threat than the hypothetical gunmen we’re talking about. The perceived risk of nuclear attack was always higher than the actual risk, even during the Cold War. Keep in mind that there were only ever two nuclear bomb attacks on anybody anywhere, neither of them by a Communist regime. Even the Cuban Missile Crisis, we are now learning, was a long way from the near-annihilation that was in the papers. When I was in the Army, our field manual showed us the response to a nuclear blast, which was to lie down on the ground and point our helmets at the mushroom cloud. The whole thing is absurd, and is (mostly) remembered by sane people as absurd. My feeling is that these drills will be too, once we’ve either calmed down or moved on to the next paranoid delusion to grip our fragile minds. Remember, we’re talking about either things that essentially, statistically, DO NOT HAPPEN, or whose risks are in the millions to one against, which is more or less the same thing.

6. A Lack of Emergency Preparedness Sank the Titanic, Didn’t It?

A criminal type of insouciance led the Titanic’s owners not to anticipate disaster, and not provide enough lifeboats for all the passengers, with tragic consequences. They claimed that having lifeboats on board and emergency drills would cause undue panic. Doesn’t that prove the need for such legitimate drills? If not this, what are the legitimate reasons a lockdown might take place?

Using the term ‘legitimate lockdown’ is unfortunately tautological. The effectiveness of lockdowns is what is under question here. Again, what assailants are we talking about here? Who are they? We are talking hypotheticals. “What if” is rarely a useful question to ask when assessing risk. Seriously, who are we afraid of? Once we identify them, we can figure out whether they’re worth worrying about.

I also don’t see that the analogy to the Titanic is warranted. Arrogance, not adherence to facts, made them under-supply the ship with lifeboats. I’m not advocating that we do nothing in the interests of security. I’m just saying we need to look carefully at what is reasonable, and address issues that 1. actually happen, and 2. we can do something about. The Titanic is actually a good example of NOT taking reasonable precautions against risk. It was not actually all that unlikely that lifeboats would be needed, and certainly if they were needed at all, everybody would need one. Even in modern times, “Two large ships sink every week on average,” says Wolfgang Rosenthal, of the GKSS Research Centre in Geesthacht, Germany. That’s about 100 every year. I imagine it was even worse leading up to 1912. The line about causing undue panic seems like somebody’s excuse for bad planning.

In fact, one might argue that it was complacency that was created by newfangled security measures (the system of bulkheads) that sank the Titanic. They had security measures in place, and due to an extremely unlikely turn of events, those weren’t effective — whereas the backup safety measures that might have saved people’s lives were ignored because of a feeling that safety concerns had already been addressed. Complex interrelationships of unlikely events, poor decisions, and human failings sank the ship, and those are things that are extremely difficult to plan for. That said, again, the reason we all know about the Titanic in the first place is because of the phenomenally unlikely circumstances that led to the incident, as well as the press reaction to the event. It was in the news because it was rare.

7. Don’t Spout Statistics: These Are People’s Lives!

Precisely. So let’s start thinking honestly and rationally about what puts them in danger, and then deal with those dangers effectively. So, once again, let’s get some perspective. We are, by all accounts, the safest people ever to walk the planet. But this seems to make us adjust our criteria for risk downward, filling in the anxiety gap with more and more trivial worries. We are the most risk-averse society that I have ever heard or read of. Although reaching zero risk is impossible, a study by a professor at Ottawa U. finds that most Canadians think that it is possible. Not only that, they expect the government and institutions to provide it for them (!). Considering how important risk is to normal cognitive and social development, I find this very troubling as an educator.

There are good examples of how realistic awareness of risk might have prevented tragedy. In addition to the statistic I quoted above, where the majority of traffic injuries involving children are caused by anxious parents driving their precious bundles to school, there are others. This one is for everyone who thinks that we ought to have a plan to deal with emergencies: You’re right. I am all for safety. The question is a matter of finding out what actually makes us unsafe, and then dealing with those things in a way that actually improves safety, while not compromising quality of life more than is necessary.

The TSA, for example. I don’t think anyone has successfully shown that the horrorshow that is U.S. customs and security actually adds much to actual safety. It detracts way more from quality of life, dignity, privacy, and common decency than it adds to safety. And the issue that it supposedly addresses, while terrible and frightening, is astronomically remote. Terrorism is down, too, if anyone’s wondering (with the exception of within the state of Israel). In order for taking a plane to be even close to as dangerous as driving (which we all do, and NEVER seem to question it, despite the recent seeming glut of people being mowed down by cars in the city in which I live and work), terrorists would have to hijack and crash a plane a week for months, AND you would have to get on a plane daily.

In fact (getting to the point), after 9/11, many people cancelled flights out of fears of crashing planes, and got into cars to take their trips. Someone crunched the numbers and found out how many people died unnecessarily in car crashes as a direct result of that decision: it turns out it was close to 1600, or about half the total life cost of 9/11, including the terrorists. It’s six times the number of people on the planes that crashed. That’s 1600 people who tried to make the right decision, but are dead because they didn’t take the actual facts into account.
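If you want to check those two ratios yourself, here is a small sketch. Only the 1600 figure comes from the study mentioned above; the two 9/11 totals are the commonly cited counts (both including the hijackers), which I am plugging in as assumptions.

```python
# Rough check of the ratios quoted above.
EXTRA_ROAD_DEATHS = 1600   # from the text: "close to 1600"
TOTAL_911_DEATHS = 2996    # ASSUMED: commonly cited total, including the 19 hijackers
ON_THE_PLANES = 265        # ASSUMED: commonly cited total aboard the four planes, including hijackers

share_of_total = EXTRA_ROAD_DEATHS / TOTAL_911_DEATHS
multiple_of_planes = EXTRA_ROAD_DEATHS / ON_THE_PLANES

print(f"Share of total 9/11 deaths: {share_of_total:.0%}")
print(f"Multiple of deaths aboard the planes: {multiple_of_planes:.1f}x")
```

With those inputs, the extra road deaths come to a little over half the total and roughly six times the number aboard the planes, which matches the figures in the paragraph above.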

It just seems like instead of reacting to risk, we could respond to it and try to make sure that what we do to address it doesn’t either miss the boat or make things worse. Safe is good, but we have to define our terms. Driving feels safer than flying, because we think we’re in control, but it is one of the single most dangerous activities we can partake in, unless we’re deep-sea divers or active-service paratroopers. I’m all for CPR and first aid. The chances of needing those skills are actually quite high: in the U.S., heart disease killed 700,142 people in 2001. If they SEEM rare, that’s part of our perceptual blind spot. Things that seem rare or safe are often quite dangerous, and things that seem (emotionally?) to be dangerous are often not worth the angst.

8. Risk and the media

Much of our risk aversion comes from the “if it bleeds, it leads” mentality of media coverage of events. A lot more of it comes from lawyers. Statistically, I would bet that your chances of being sued for some improbable event would greatly outweigh the chances of the original event happening in the first place. I have, though, weirdly enough, also read articles that suggest that the number of frivolous lawsuits actually brought to court in North America is much smaller than most people assume – there seems to be some evidence that the insurance companies are actively encouraging a sense of the overwhelming use of frivolous lawsuits in popular culture so that they can justify higher rates. On top of that, a major beneficiary of the Cult of Fear is the horde of manufacturers of Safety Products, who prey on irrational paranoia. Free Range Kids details some of the more egregious examples of a manufactured crisis with expensive manufactured cures: baby kneepads, for instance, for crawling tots. As if thousands of generations of infants had evolved to crawl “unsafely”, just waiting for the right product to correct nature’s deficiencies. Sigh. So, in answer to my earlier question of Cui Bono: “Too many dubious sorts of people”.

One of the major factors that affect our minds’ perception of risk is what Daniel Gardner, who wrote a book on the subject and spoke eloquently at a lecture I attended a couple of years ago, calls a “feedback loop” generated by media. The original noise is picked up and amplified in a kind of echo chamber, and this escalates the brain’s response to threat in ways that we could never have without the media’s involvement. Reporters are people too, and their risk assessment tools are just as terrible as the rest of ours. Their choices, though, have social effects that are wide-reaching.

In Canada, where I live, there was a news story recently that, though local in nature, became a national story: a doctor at a clinic in Ottawa had improperly sterilised her colonoscopy equipment, and around 5 000 ex-patients were being contacted by letter, informing them of a remote risk of infection by hepatitis or HIV. The odds against HIV infection were more than a billion to one, which so far exceeds the “de minimis” rule that it kind of shocked me that it was even mentioned. The media went nuts, probably because of the shock value attached to AIDS-related material. The authorities hesitated to contact the media at all about the matter, perhaps knowing what kind of a zoo it would become. When the media got hold of that fact, of course, it was played to the hilt. It was made to look like a conspiracy; at the very least, the mostly-risk-uneducated public felt that they were being patronised.

The next bit of newsworthy material that came out of this story was that clinics around the country were worried about cancellations of important colonoscopic procedures by people who had heard the news item and lost confidence in the procedure, thereby creating a real risk from undiagnosed conditions that could eventually become fatal. This was creating a situation somewhat reminiscent of the anti-vaccine movement that puts thousands of people at risk based on ignorance, fear, and bad science.

I’ll add this: Information is not automatically a good thing, though I definitely want as much as possible in order to make decisions. How we use that information to make decisions is just as important. I partly agree with those who say that the withholding of information can seem patronising, but since the medium is the message, how that information is disseminated and presented is enormously influential. The Feedback Loop that Gardner describes is an unfortunate, but real, byproduct of the way media produces stories. And until schools’ curricula start to focus a lot more (as we have hopefully begun to do) on things like cognitive blind spots, logical fallacies, analysis of information, correcting lazy thinking, etc., and until politicians’ use of language is held to a higher standard, we’re going to have to deal with a question mark as to how people are going to react to risk.